The Bouncing Ball Project began as a final project for my class “Computer Assisted Analysis and Composition,” which was taught by Stratis Minakakis at the New England Conservatory. This class was, among other things, an introduction to coding for composers via the visual programming language OpenMusic. At the time of my project, I was reading “Sweet Anticipation” by David Huron, which, for the uninitiated, is a book discussing the impact of expectation on the human experience of music. It occurred to me that the rhythm of a bouncing ball was a universally familiar rhythm, and that the expectation of how it would sound would be strong for most listeners. I was interested in using this pattern as a rhythmic language for a musical composition, hoping to play with the rhythmic expectations of listeners.

I eventually landed on a particular generative algorithm that semi-scrambles the order of beats of a bouncing ball, then linearly interpolates from a steady pulse to this more chaotic rhythm.

For those interested in the specifics of my algorithm:

- Generate a geometric series (this is the rhythm of a bouncing ball)

- Scramble this geometric series 20 times, creating 20 scrambled series

- Of these series, take the one with the “least scrambling” (i.e., the most consecutive values matching the original geometric series, ascending or descending).

- Map this to a rhythm.

- Make shorter beats quieter.

- Now interpolate between this rhythm and a rhythm with the same duration and same number of beats in which each beat has the same duration (evenly spaced).

- Reverse the pattern, thus transitioning from even beats to “bouncing” ones.
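For readers who prefer code, here is a minimal Python sketch of the steps above. It is not the original OpenMusic patch. The function names, the ratio of 0.8, the velocity range, and the number of interpolation stages are all assumptions chosen for illustration. "Least scrambling" is read here as the number of adjacent pairs that are also neighbours in the original series, in either direction, and "reverse the pattern" is read as reversing the order of the interpolation stages.

```python
import random


def geometric_series(first, ratio, count):
    """Beat durations of an idealised bouncing ball (assumed model)."""
    return [first * ratio**i for i in range(count)]


def adjacency_score(original, candidate):
    """Count adjacent pairs in candidate that are also neighbours in the
    original series, ascending or descending (one reading of
    'least scrambling')."""
    position = {value: i for i, value in enumerate(original)}
    return sum(
        1
        for a, b in zip(candidate, candidate[1:])
        if abs(position[a] - position[b]) == 1
    )


def least_scrambled(original, attempts=20, rng=None):
    """Shuffle the series `attempts` times and keep the most orderly result."""
    rng = rng or random.Random()
    candidates = []
    for _ in range(attempts):
        shuffled = list(original)
        rng.shuffle(shuffled)
        candidates.append(shuffled)
    return max(candidates, key=lambda c: adjacency_score(original, c))


def velocities(durations, quiet=40, loud=110):
    """Make shorter beats quieter by scaling loudness with duration.
    The 40-110 range is a hypothetical MIDI-style velocity range."""
    longest = max(durations)
    return [round(quiet + (loud - quiet) * d / longest) for d in durations]


def even_to_bouncing(rhythm, stages):
    """Interpolate linearly from the rhythm to an evenly spaced pulse of the
    same total duration, then reverse the stage order so the result moves
    from even beats to 'bouncing' ones. Requires stages >= 2."""
    even = sum(rhythm) / len(rhythm)
    bouncing_to_even = []
    for k in range(stages):
        t = k / (stages - 1)  # 0.0 = scrambled rhythm, 1.0 = steady pulse
        bouncing_to_even.append([(1 - t) * d + t * even for d in rhythm])
    return bouncing_to_even[::-1]


if __name__ == "__main__":
    series = geometric_series(1.0, 0.8, 8)
    rhythm = least_scrambled(series, attempts=20, rng=random.Random(0))
    for stage in even_to_bouncing(rhythm, stages=5):
        print([round(d, 3) for d in stage], velocities(stage))
```

Because each stage is a weighted average of two rhythms with the same total duration, every stage lasts exactly as long as the original series; only the spacing of the beats changes.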

You can listen to this composition here:

Despite its simplicity, my professor was quite happy with it, and encouraged me to continue to develop my ideas. And so, my journey with this project continued.

Before any updates to the work itself, I gave a presentation on OpenMusic and my experience creating this work at the Music ✖ Tech Fusion night in Brookline. You can watch the recording here (I explain in more depth how I created the work itself):

Next, I began to think critically about how I could improve this piece. After some reflection, I decided to switch from notated music to an audio-visual piece, utilizing a real bouncing ball for my sound source. This was for a few reasons:

- Stretching audio clips results in pitch shifting, leading to a more interesting sonic experience.

- An audio-visual representation engages more senses while allowing me to leverage my technical strengths.

- Including a visual of a bouncing ball creates a new way for listeners to engage with the dynamic rhythms; when the source of the rhythm is no longer a mystery, how does a listener engage with it?

- The compositional work I had implemented during my exploration, although aesthetically pleasing, seemed to me to detract from the original purpose of the piece. An audio-visual approach could add to the experience without taking away from that intention.
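To make the first point concrete: if a clip is stretched by simply playing it faster or slower (an assumption here; a time-stretch that preserves pitch would behave differently), the pitch change follows directly from the speed ratio. The helper below is a hypothetical illustration, not part of the project code.

```python
import math


def pitch_shift_semitones(original_duration, new_duration):
    """Pitch change, in semitones, when a clip of original_duration seconds
    is resampled to last new_duration seconds (plain speed change)."""
    speed = original_duration / new_duration
    return 12 * math.log2(speed)
```

Squeezing a clip to half its length doubles the playback speed and raises it an octave (12 semitones); stretching it to twice its length lowers it an octave.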

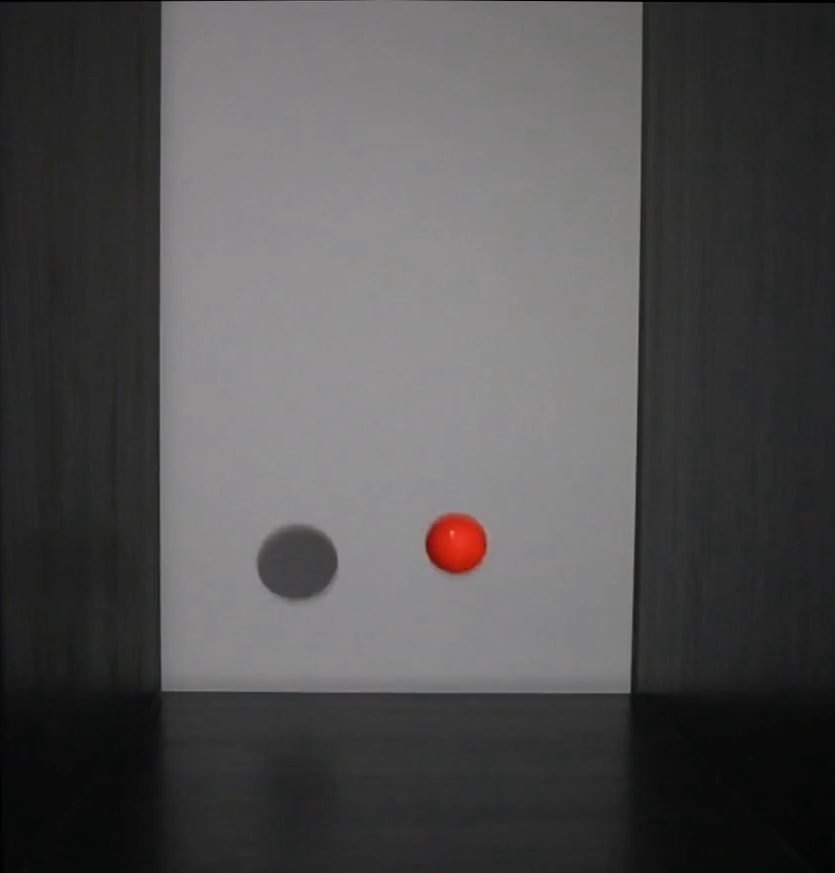

The creation process was relatively smooth. First, I re-implemented the algorithm from OpenMusic in Python, utilizing MoviePy for video clipping. Next, I created the source video by recording the bounces of a ball inside a black shelf. I did some light editing, then plugged the video into my Python script for this final result:

Over time, I came to feel that something was missing; the piece lacked gesture, the human influence. As such, I had the idea to create a controller for my algorithm. My bouncing ball project would become a playable instrument (if perhaps an unwieldy one). I created the following web-based platform for controlling the clips from my video project. Users have control over:

- Randomness – how random the rhythmic scrambling should feel

- Even-ness – whether the rhythms should be more like a real bouncing ball or like a steady pulse

- Speed bias – whether the rhythms should sample more from the early, slow beats, or the late, fast ones.

You can try it out here.
